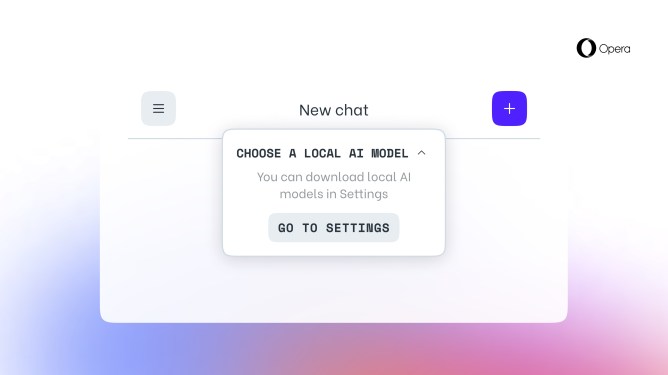

Web browser company Opera has announced that users can now download and run large language models (LLMs) locally on their computers. The feature is rolling out first to Opera One users who receive developer stream updates, and it lets them choose from over 150 models from more than 50 families.

What are Large Language Models?

Large language models (LLMs) are AI systems trained on large amounts of text so that they can process, interpret, and generate human-like text. This makes them useful for applications such as language translation, content creation, and more.

Supported Models and Framework

The supported models include Llama from Meta, Gemma from Google, and Vicuna, among others. Opera is using the Ollama open-source framework in the browser to run these models locally on the user’s computer. Currently, all available models are a subset of Ollama’s library, but in the future, the company plans to include models from different sources.
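To give a sense of what running a model through Ollama looks like outside the browser, here is a minimal sketch that sends a prompt to a standalone Ollama server. It assumes Ollama is installed separately, is listening on its default local address, and has already pulled the named model. Whether Opera's built-in integration exposes the same endpoint is not stated in the announcement, so treat this as an illustration of Ollama itself, not of Opera's implementation.

```python
import json
import urllib.request

# Assumption: a standalone Ollama server on its default local address.
# Opera's in-browser integration may not expose this endpoint.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming text generation request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With a server running and a model pulled, `generate("llama2", "Why is the sky blue?")` would return the model's answer as a string. The model name here is only an example.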

How Does It Work?

When users select a model, it is downloaded and stored locally on their system. Each variant can take up more than 2GB of space, so users should check their free storage before downloading to avoid running out of room. Opera is not taking any steps to save storage while downloading the models.
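Since the browser does not manage storage for downloaded models, a quick check before downloading several variants can help. The sketch below is one way to do that. The helper names, the 5 GB safety reserve, and the per-model sizes are all illustrative assumptions; actual sizes vary by model and variant.

```python
import shutil

BYTES_PER_GB = 1024 ** 3


def free_bytes_on(path: str = ".") -> int:
    """Return the free space, in bytes, on the disk that holds `path`."""
    return shutil.disk_usage(path).free


def can_fit_models(
    free_bytes: int,
    model_sizes_gb: list[float],
    reserve_gb: float = 5.0,
) -> bool:
    """Check whether the models fit while leaving `reserve_gb` untouched.

    The reserve is a hypothetical safety margin, not an Opera setting.
    """
    needed_bytes = (sum(model_sizes_gb) + reserve_gb) * BYTES_PER_GB
    return free_bytes >= needed_bytes
```

For example, `can_fit_models(free_bytes_on("."), [2.0, 4.0])` reports whether two hypothetical variants of 2 GB and 4 GB would fit on the current disk with the default reserve left over.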

Future Plans and Benefits

Jan Standal, VP at Opera, said in a statement that this is the first time a browser has offered access to a large selection of third-party local LLMs directly. He added that the models are expected to shrink in size as they become more specialized for specific tasks.

This feature is particularly useful for users who want to test various models locally without relying on online tools like Quora’s Poe and HuggingChat. While the models take up significant storage space, running them locally offers a convenient way to explore them without worrying about internet connectivity or data transfer limits.

Opera’s AI Journey

Opera has been exploring AI-powered features since last year. The company launched a sidebar assistant called Aria in May and brought it to the iOS version in August. In January, Opera announced that it is building an AI-powered browser with its own engine for iOS, a move tied to the EU’s Digital Markets Act (DMA) requirements.

Conclusion

Opera’s decision to enable local LLM support marks a significant step forward in making AI technology more accessible and user-friendly. With over 150 models from more than 50 families available, users can explore various applications and benefits without relying on online tools or cloud services. As this feature continues to evolve, it will be interesting to see how Opera addresses storage concerns and includes models from different sources.

About the Author

Ivan Mehta is a global consumer tech reporter at TechCrunch. He has previously worked for publications like Huffington Post and The Next Web, and can be reached at im@ivanmehta.com.
